[Cite as Williams, Damien P., Heavenly Bodies: Why It Matters That Cyborgs Have Always Been About Disability, Mental Health, and Marginalization (June 8, 2019). Available at SSRN: https://ssrn.com/abstract=3401342 or http://dx.doi.org/10.2139/ssrn.3401342]

INTRODUCTION

The history of biotechnological intervention on the human body has always been tied to conceptual frameworks of disability and mental health, but certain biases and assumptions have forcibly altered and erased the public awareness of that understanding. As humans move into a future of climate catastrophe, space travel, and constantly shifting understandings of our place in the world, we will be increasingly confronted with concerns over who will be used as research subjects, concerns over whose stakeholder positions will be acknowledged and preferenced, and concerns over the kinds of changes that human bodies will necessarily undergo as they adapt to their changing environments, be they terrestrial or interstellar. Who will be tested, and how, so that we can better understand what kinds of bodyminds will be “suitable” for our future modes of existence?[1] How will we test the effects of conditions like pregnancy and hormone replacement therapy (HRT) in space, and what will happen to our bodies and minds after extended exposure to low light, zero gravity, high-radiation environments, or the increasing warmth and wetness of our home planet?

During the June 2018 “Decolonizing Mars” event at the Library of Congress in Washington, DC, several attendees discussed the fact that the bodyminds of disabled folx might be better suited to space life, already being accustomed to pushing off of surfaces and orienting themselves to the world in different ways, and that the integration of body and technology wouldn’t be anything new for many people with disabilities. In that context, I submit that cyborgs and space travel are, always have been, and will continue to be about disability and marginalization, but that Western society’s relationship to disabled people has created a situation in which many people do everything they can to conceal that fact from the popular historical narratives about what it means for humans to live and explore. In order to survive and thrive into the future, humanity will have to carefully and intentionally take this history up again, and consider the present-day lived experience of those beings—human and otherwise—whose lives are and have been most impacted by the socioethical contexts in which we talk about technology and space.

[Image of Mars as seen from space, via JPL]

This paper explores some history and theories about cyborgs—humans with biotechnological interventions which allow them to regulate their own internal bodily processes—and how those compare to the realities of how we treat and consider currently-living people who are physically enmeshed with technology. I’ll explore several ways in which the above-listed considerations have been alternately overlooked and taken up by various theorists, and some of the many different strategies and formulations for integrating these theories into what will likely become everyday concerns in the future. In fact, by exploring responses from disability studies scholars and artists who have interrogated and problematized the popular vision of cyborgs, the future, and life in space, I will demonstrate that our clearest path toward the future of living with biotechnologies is a reengagement with the everyday lives of disabled and other marginalized persons, today.

CYBORGS AND MENTAL HEALTH[2]

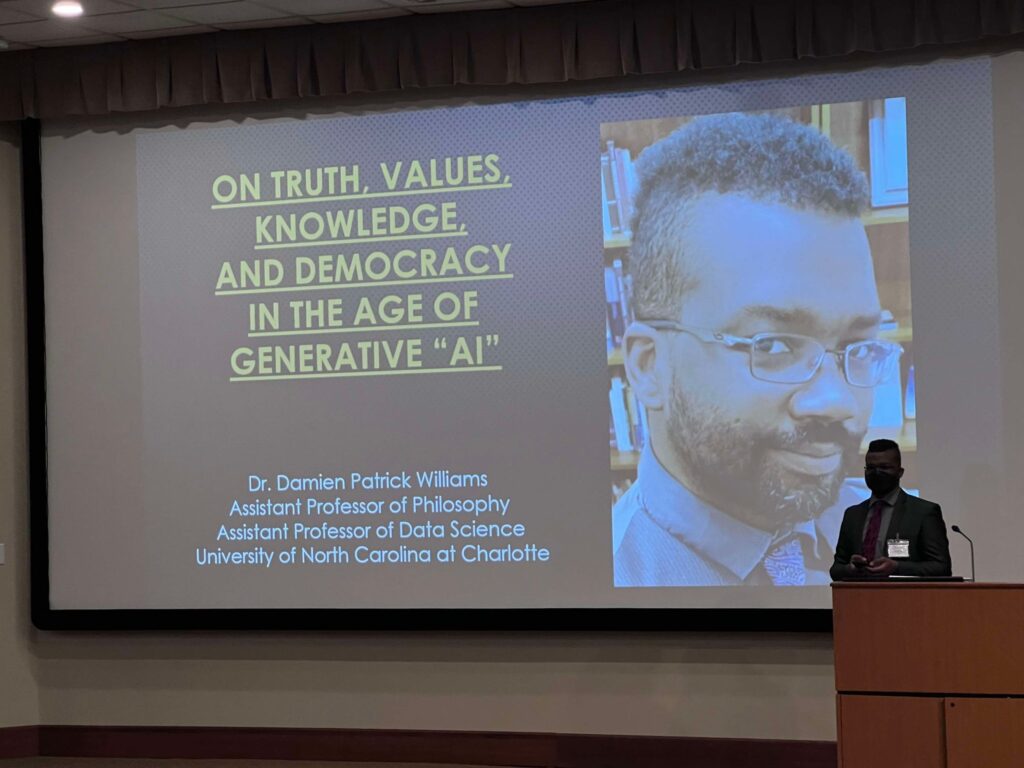

The idea of systematically using technological and biochemical interventions to help a human person regulate their bodily processes to adapt to life in space takes its start from the work of two men: Manfred E. Clynes and Nathan S. Kline. Even at a young age, Clynes’ work always seemed to engage with feedback and modulation and the interplay of different systems, and a correspondence with physicist Albert Einstein encouraged him and gave him the social cachet to build a music career.[3] But in 1956, Clynes by chance met Dr. Nathan S. Kline, who was then the Director of the Research Center of Rockland State Hospital, where they both worked.[4] It was through this meeting that the two would become longtime collaborators and would come to coin one of the most resonant technoscientific imaginaries and conceptual tools of the twentieth and twenty-first centuries: The Cyborg.

Oddly, neither Kline’s New York Times (NYT) obituary nor his biographies at The Nathan S. Kline Institute for Psychiatric Research and the International Network For The History Of Neuropsychopharmacology mention his role in coining the term “cyborg,” even though Clynes was drawn to Kline in large part due to the latter’s work on neurochemistry and the groundbreaking development of antidepressants.[5] Kline’s work on antidepressants was specifically about humans’ use of exterior sources of neurochemicals to self-regulate their own systems, and it was precisely this work which informed the later dream of unconscious chemical adaptation to any environment. Kline’s work on antidepressants stemmed from his recognition that certain neurotransmitters were not being produced by the brains of patients with certain types of depression. Kline sought a way to either induce the production of these chemicals, or to provide them from an outside source, in doses and at intervals which would allow them to more easily integrate into a human body.[6] Kline envisioned this process as one of the intentional regulation of organic bodily systems, through specific chemical interventions.

Clynes and others saw much wider potential value in this work, especially as, in the late 1950’s and early 1960’s, the United States of America (USA) and the Union of Soviet Socialist Republics (USSR) were in the throes of both the Cold War and the Space Race. Each superpower sought to become the first to put a human being into space, to claim it for their nations and the people therein, and it was in this context that Clynes and Kline wrote the paper “Cyborgs and Space,” and coined the term “Cyborg.”[7] In this paper, Clynes and Kline described a cyborg (a portmanteau of “cybernetic organism”) as a being which would have the means to regulate and alter previously autonomic bodily processes, through the use of chemical alterations, in a cybernetic feedback loop. The paper was largely a theoretical exploration of how we might use chemical biotechnological interventions to regulate autonomic nervous and pulmonary function, and also to make unconscious certain intentional functions and processes.[8] A cyborg would be able to survive the rigors of space travel—such as increased gravitational forces and radiation, long lightless stretches, and bodily degradation—by regulating the chemical processes of their body to adapt to each new situation, as necessary.

But as the Space Race wore on, and more and more humans actually went into space, there was increasingly less focus on the alterations and adaptations that would be necessary to survive in space, and greater public emphasis placed on a triumphalist narrative of human will and ingenuity. The narrative regarding humans in space became primarily about those who had “the right stuff,” rather than a question of what we would have to do in order to adapt and thrive, and so the image of the cyborg fell away and was altered. And a whole suite of possibilities for how we might have understood—and treated—different kinds of embodiment changed along with it.

As Alison Kafer discusses in her book Feminist, Queer, Crip, feminist and ecological discourses in the 1970’s, 80’s, and 90’s gave rise to widely-read theorists such as Donna Haraway, who used the “cyborg” concept in a rhetorical mode that locks it to particular ideals about bodily integrity and outdated notions like “Severe Handicaps.”[9] (Below, we’ll discuss how, in her Robo Sapiens Japanicus, Jennifer Robertson relates this to the Japanese concept of Gotai, or “Five Body.”) Kafer links this discussion to the history of how Kline and Clynes’ work on neurochemical antidepressants at the Rockland Institute was very likely predicated on testing and treating patients—both via drugs and instrumental interventions—against their will. Additionally, throughout the 1940’s, 50’s and 60’s, Rockland was subject to multiple accusations of patient mistreatment and mismanagement, including physical abuse, malnourishment, and even rape.[10] While it may well not have been the case that any of those more horrifying things happened under Kline and Clynes’ direction, they did happen on their watch, and the culture of testing institutionalized patients without their consent was widespread in the United States, well into the 1970’s.[11]

[Portrait Image of Nathan S Kline: A monochrome photograph of an older white man with frizzy white hair and a white beard wearing glasses and a white lab coat, white shirt, and cross-hatch patterned tie]

Throughout the long history of eugenics in the United States, ideas about what constitutes the “right kind” of person—be that on the basis of ethnicity, gender, physical or mental ability, or all of the above—led to events in which people institutionalized against their will were forcibly sterilized due to claims of their reduced “fitness” and mental faculties. People with uteri were given forcible hysterectomies and people with testes were chemically or physically castrated, and certain people were simply put to death because they were seen as “unfit” to ever reintegrate with society. All of these things happened starting at very early ages, and, as one might guess, issues of race complicated every facet of them. As Harriet Washington notes in her book Medical Apartheid:

Unfortunately, a black child is more likely than a white one to have his parent completely removed from the informed-consent equation. Black children are far more likely than whites to be institutionalized, in which case the parents are often unable to consent freely or are not consulted at all.[12]

Often, and to this day, children judged as even possibly having a higher likelihood of “mental unfitness” are aborted outright, as in the case of Iceland’s and the Netherlands’ use of prenatal screening technologies to determine whether or not a child has Down Syndrome.[13]

Kafer’s discussion of the Rockland Institute’s depredations serves to illustrate that even some of the most foundational work and well-respected researchers have been party to monstrous practices in order to make their discoveries. Even as Clynes and Kline’s ideal of the cyborg developed out of a concern for mental health and recognition that the human body was not developed to fit the niche of outer space, they did their work in a context built upon the degradation and predation of disabled and forcibly institutionalized persons. This understanding of marginalized persons as resources to be used or situated embodiments to be emulated has been unfortunately persistent, and it has changed the way we think about what a cyborg ought to be. Instead of a recognition that, as Dr. Ashley Shew, a researcher at the intersection of philosophy, technology, and disability, has put it, we would all be disabled in space, and so would all need some form of cybernetic system of interventions to survive, a myth of the elite, perfectible human took root.[14] There are a number of reasons why that is, and many implications for what it’s come to mean.

CYBORGS AND DISABILITY[15]

In the last three decades, as things like the internet captured the public imagination, more theorists explored the image of the human as increasingly entangled with technology. Theorists of biology, sociology, and scientific history, such as Donna Haraway, came to use the notion of the cyborg as a way to describe how human lives may become entangled with nonhuman entities and systems.[16] At the same time, the understanding of a cyborg as some superhuman fusion of human and machine was being taken up and reinforced by popular culture, creating what Shew calls “Technoableism” and what anthropologist of robotics and Japanese culture Jennifer Robertson calls “Cyborg-ableism.”[17] In Techno- or Cyborg-Ableism, technologized bodies are ostensibly lauded as “superior,” but only while still being marked out as other.

Shew’s paper “Up-Standing, Norms, Technology, and Disability” explores how ableism, expectations, and particularities of language serve to marginalize disabled bodies.[18] Shew takes her title from the fact that most technological “solutions” designed for people who don’t use their legs are intended to facilitate their engaging the world as if they did. Many if not most things in human societies are designed to be used within a certain range of height that assumes the user is standing; if your default mode is sitting, then your engagement with the vast majority of the world will be radically different. This is just one example of what is known as the social construction model of disability, which says that it’s not the physiological differences themselves which disable, but rather the ways that spaces, architectures, and basic societal assumptions limit how a person is expected to intersect with the world and what kind of bodymind they “should” have.[19] Shew notes that, while we tend to think of cyborgs as some seamless integration of technology and bodies, wheelchair and crutch users consider their chairs and crutches to be fairly integral extensions and interventions, a part of themselves. The problem is that most societies assume different things about these different modes. Shew mentions a friend of hers:

She’s an amputee who no longer uses a prosthetic leg, but she uses forearm crutches and a wheelchair. (She has a hemipelvectomy, so prosthetics are a real pain for her to get a good fit and there aren’t a lot of options.) She talks about how people have these different perceptions of devices. When she uses her chair people treat her differently than when she uses her crutches, but the determination of which she uses has more to do with the activities she expects for the day, rather than her physical wellbeing.

But people tend to think she’s recovering from something when she moves from chair to sticks.

She has been an [amputee] for 18 years.

She has/is as recovered as she can get.[20]

Shew is one of many researchers who have noted that a large number of paraplegics and other wheelchair users do not want exoskeletons, and that those fancy stair-climbing wheelchairs aren’t covered by health insurance, because they’re classed not as assistive devices, but as vehicles. Shew says that what most people who don’t have the use of their legs want is access to the same things that people who do have the use of their legs have. Ultimately, in around the time it takes for Apple to come out with a new iPhone—roughly eighteen months—a person who has developed a disability—lost the use of their legs, the use of their sight, the use of their hearing, the use of their arms, whatever—will come to engage with and adapt to that new lived physical reality as normal. Many societies think about disability as a life-altering, world-changing thing—something that lasts forever and nothing will ever be the same for you—but the fact of the matter is that humans are plastic, adaptable, and malleable. We learn how to live around what we are, and we learn it very quickly.

All of this comes back to the idea of biases ingrained into social institutions. Our expectations of what a “normal functioning body” is get imposed by society as a whole and placed as restrictions and demands on the bodies of those whom we deem to be “malfunctioning.” As Shew says, “There’s such a pressure to get the prosthesis as if that solves all the problems of maintenance and body and infrastructure. And the pressure is for very expensive tech at that.”

Humans came to be seen as those creatures which self-analyze and then alter and adapt themselves based upon said self-analysis. Many philosophers of technology have argued that we are always technologically mediated, and that that mediation shapes and is shaped by our physiological and sociocultural experiences, and elsewhere, I’ve explored the questions of identity that come along with Ship-of-Theseus-like questions of bodily integrity that do not quite fit into this work.[21] Suffice it to say that even as promises of becoming “more than” human have flooded the public imagination, they have been met with equally ardent cries of “but if you lose a part of your body, you’re not really you!” Either of these positions serves only to erase and marginalize the real lived experiences of disabled people, for the sake of some assumption about what the human bodymind “should” or even just might be. Even into the twenty-first century, cyborgologists such as Amber Case, a self-described “Cyborg Anthropologist,” have argued that, thanks to augmented reality, smart phone devices, and the generally ubiquitous integration of technology into the daily life of the modern human being, “We Are All Cyborgs Now.”[22] But something crucial gets lost, here, when we obfuscate or elide the real experiences of people with disabilities from the conversation about cyborgs and cybernetics.

In her pieces “Dawn of the Tryborg” and “Common Cyborg,” Jillian Weise specifically homes in on much of the foundation for the modern mythology of cyborg experience, including that which comes out of perspectives like Haraway’s and Case’s.[23] The idea that anyone with a smartphone or with a particular conceptual relationship to the world is automatically a cyborg, Weise says, does violence to the very real lived experience of people with prosthetics or artificial organs or implants that keep them alive. Those latter interventions need maintenance to keep them functional in the face of damage, to prevent life-threatening infection, and to adjust them for day-to-day changes, and while they are not necessarily “sexy,” they are a truer example of what the term’s originators thought it would mean to be a cyborg. “Tryborgs,” on Weise’s view, are those people who want all the glitz and glory of being interconnected with technology, without any of the practical implications. They are the transhumanists who believe that we will all be able to upload our consciousnesses and change our shape, at will, with no muss and no fuss. They want to be the inspirational figures, without having to suffer any losses or do any of the messy upkeep and maintenance, to get there. And they exist in many cultures.

[Port-A-Cath Chemo Port (Images from Cancer.gov {Left} and MySamanthaJane.com {Right})]

Jennifer Robertson’s Robo Sapiens Japanicus consists of a close investigation of Japan’s historical cultural engagement with robots, and her sixth chapter, “Cyborg-Ableism Beyond The Uncanny (Valley),” deals specifically with Japanese notions of disability, mental health, and cyborg-ableism.[24] Though she doesn’t directly consider the roots of the cyborg concept beyond Haraway, back to Kline and Clynes, Robertson delves into things like the removal of disabled veterans from the streets for the 1964 Tokyo Olympics, the creation of the first Paralympics in 1948, the fact that one out of six people in Asia and the Pacific is born with some form of disability, and the fact that Japan only ratified the UN’s Convention on the Rights of Persons with Disabilities and drafted its own disability protection legislation after many years and a great deal of foreign pressure.[25] And even with that pressure, Robertson says, it was only with the 2016 enforcement of these laws that all governmental institutions and private-sector businesses were required to remove the social barriers for people with disabilities.[26] Before then, many disabled athletes weren’t allowed to train with able-bodied teammates, and had to raise their own money to purchase prostheses, at which point many of them, if they were successful, got accused of exploiting their disability for monetary gain.[27] In this way, Robertson highlights a cultural indifference to or dismissal of disabled people, even as governments and businesses focused on and developed robotic prostheses.[28]

In a cultural sense, the desires to either fit in or to use technology to become “more” and “better than” are what tend to drive cyborg-ableist concerns. Robertson discusses Tobin Siebers and the concept of able-bodied passing, comparing it to queer folx and “straight passing;” in each case there are transitive and intransitive forms of passing, where one is either actively effacing their difference/otherness, or merely benefitting from outside observers simply not recognizing said difference.[29] To that end, many may choose to make their disability (or their queerness, or both) unignorable by way of stylized prostheses; in fact, much in line with Shew’s assertions above, while people who’ve recently lost a limb may start off wanting a lifelike replacement, they tend to shift to wanting something that works and feels better, rather than just looking a particular way.[30] So are stylized prostheses better understood as empowering or distracting? On the one hand, there is something empowering about the use of a prosthetic to reshape and change the way the outside world can understand you; on the other hand, “prosthetics can divert attention from the disabled limb to its replacement.”[31] But this replacement, in itself, can be a source of discomfort for able-bodied folx.

In the section “What is (and is not) the uncanny valley?” Robertson explores Masahiro Mori’s concept of Bukimi no tani, which Robertson translates as “the valley of eerie feeling,” rather than the more familiar “uncanny valley.”[32] Paired with shinwakan no tani or “familiar feeling valley,” Mori describes this as a kind of sudden and shocking frustration of expectation, arising when one is in the midst of encountering increasingly familiar things. This concept depends heavily on Mori’s assumptions about what would constitute an “average, healthy, person” and what Robertson labels his “almost callous indifference toward disabled persons.”[33] In Mori’s graphs and descriptions of the Valley, he places sick and disabled people on the upward curve of the “eerie,” moving away from corpses, zombies, and prosthetic hands.[34]

While many people have taken the uncanny valley as some kind of gospel law, Robertson contends we should, rather, expect that the shape or even presence of an uncanny valley would be a highly subjective thing, based on factors such as “physical and cognitive abilities, age, sex, gender, sexuality, ethnicity, education, religion, and cultural background;” and, indeed, Mori himself has said that it was meant only as an “impressionistic” guide.[35] Humans can adjust to, accept, and embrace the unfamiliar, designers can avoid the uncanny valley, and many people on earth live in situations where injury, illness, and death are not “sudden and unfamiliar” or “eerie,” but rather are unfortunately everyday occurrences. But Mori’s response, and much of what is seen in the Japanese exoskeleton market, is just another example of Gotai, the traditional Japanese understanding that a “whole” or “normal” body is made of five constituent parts in combination: either the head, two arms, and two legs, or the head, neck, torso, arms, and legs.[36] This theory holds that anything that breaks this form breaks the person, a perspective which firmly binds these notions of “completeness” to notions of mental health.

Hirotada Ototake’s book Gotai Fumanzoku or “incomplete/unsatisfactory body” (English title: “No One’s Perfect”) is an autobiography about his tetra-amelia syndrome, which prevented his arms and legs from developing during gestation, and it stresses his “Normality” and his desire to be treated equally.[37] But, Robertson notes, the exoskeletons that Japanese culture champions as a route to a whole body are not ways for people like Ototake to regain Gotai, and there is a difference between prosthetics that replace a limb and those that “enhance” an existing but disabled one.[38] Robertson, here, in a move similar to but not directly referencing Kafer, touches on Haraway’s use of the cyborg as a metaphor for relationality and reflexivity, and offers a critique of Haraway’s seeming conception of “disability” as a singular category rather than the multiform, variable conditions that can be linked under this label.[39] This, along with the ableist notions of transhumanists like Max More and Natasha Vita-More about what the “perfect” body should be, feeds into narratives that comprise this vision of cyborgs as a somehow “perfected” humanity.

But cyborgs were conceived as a means for humans to live in space, a situation which, again, would require the constant and potentially life-threatening work of keeping close track of minute changes in the bodyminds of astronauts and in their relationship to their environment—processes that are already well-known to, e.g., diabetics or people with peripheral neuropathy. For a person within those lived experiences, always being aware of the state, position, and integrity of their body is always already a life-or-death scenario, in ways that have to be learned and mimicked by people who are otherwise able-bodied. Had we maintained disabled people’s stories as a part of the mythology of the cyborg from the beginning, Western societies might now have a better relationship with concepts of disability and mental health. This relationship might have easily arisen from the recognition that most if not all disabled people are cyborgs, just as all spacefaring humans must become cyborgs, and that this, as Clynes and Kline understood, is precisely because all spacefaring humans will become disabled by the very act of existing in space. This means that, in essence, spacefaring humans currently do and will continue to experience the social construction of disability.

[Members of the Gallaudet Eleven chat in the zero-g aircraft that flew out of Naval Air Station in Pensacola, Fla.; Credits: U.S. Navy/Gallaudet University collection]

But since we have not, in fact, reinforced that chain of understanding, contemporary theorists would be well served to explore the situated and lived experiences of people with different configurations of bodyminds, and to listen to what they know about themselves. As Shew has noted, those people who have experience with orienting themselves to the world via pushing off of surfaces or using their arms as primary means of propulsion would be better positioned to move in weightless environments and to teach others new strategies to do the same. Because, ultimately, people with disabilities are often already interwoven with their technologies, in ways idealized by technologists, but their lived experience is not recognized and appreciated for what it is. If we take these lived experiences and incorporate the people who embody them, in conjunction with the original intent of the notion of the cyborg, we might have the beginning of a system by which we can rehabilitate the notion of the cyborg—but overcoming the historical trends that have led us here will take a great deal of work.

CYBORGS AND MARGINALIZATION

While it has long been assumed that the future of humanity would have to adapt both its forms and conceptual relations to multiform and multimodal embodiments, through our explorations we have come to understand how the category of the cyborg, which should have made fertile ground for this expanded understanding, has instead become a site of disenfranchisement. As we’ve seen, Kafer’s project in Feminist, Queer, Crip aims to reframe disabled people as cyborgs because of their political practices rather than their bodies, and that enframing of politics, embodiment, and biotechnological intervention has roots and mirrors in other persistent forms of marginalization. Those other roots of racism and misogyny give rise to several questions, such as, “Whose bodies will we make subject to or deign to include in tests for space exploration?” More to the point, if we are meant to understand the cyborg in terms of people whose embodiments are already technopolitically mediated, then who can and should we understand as cyborgs, now? This matters because there is a crucial difference between a group of people who have “disnormalized” themselves, and a group which has been othered by people who don’t know or understand their lived experience.

Again, there are multiple sites of marginalization which can be demonstrated as having a force-multiplying effect on how people with implants, prostheses, or biochemical injection or ingestion regimens are either accepted or disenfranchised by the society in which they live. We can borrow, here, the framework of Kimberlé Williams Crenshaw’s Intersectionality theory, to help make sense of this:

…problems of exclusion cannot be solved simply by including Black women within an already established analytical structure. Because the intersectional experience is greater than the sum of racism and sexism, any analysis that does not take intersectionality into account cannot sufficiently address the particular manner in which Black women are subordinated. (Emphasis added.)[40]

Crenshaw centers Black women, here, but this isn’t to say that only Black women can be intersectional subjects. Rather, she uses Black women as an example of how groups of people that have been cast as only one kind of identity (Black, Woman) would be far better understood as the center of an intersectional process. Might we think of trans folx, who sit at the center of their identities, of biomedical technologies such as hormone replacement therapy (HRT), binders, or packers, of societal expectations about how their bodies ought to present and behave, and of public technologies such as airport scanners, as cyborgs?[41] If so, they would have vastly different valences of legibility and operation than, say, a diabetic with an insulin pump—though similar ones to a person with an ostomy bag.[42] If we work to understand people in an intersectional way, we can recognize the many vectors for different kinds of oppression in the world, and understand that even those intersectional subjects with shared component roots will have different particularities of expression and avenues by which we might redress their needs—a recognition that has been sorely and consistently lacking in much of our public discourse, to date.

When we again explore the histories of eugenics and medicalization, we find that even up to this point in the 21st century, there are well-regarded researchers and even textbooks on biomedical ethics which barely touch on these issues, let alone on understanding them through a lens of intersectionality of oppression. For instance, Francis L. Macrina’s Scientific Integrity is in its fourth edition, and yet still seems to lack any substantive contextual discussion of changes made in the history of research ethics standards and practices—such as what actually happened in the Tuskegee syphilis trials. Macrina mentions that the trials took place, and even the nature of the population on which they were conducted, but he does not at any point mention the fact that researchers targeted the study’s population because they were Black, and were therefore conceptualized as resources.[43] While it is, perhaps, unfair to expect Macrina to touch on every nuanced concern of every human subject trial, the assumption that social features are not worthy of discussion serves to reinforce a whole host of other assumptions about things like the objectivity of testing criteria or the clarity of explanations in gaining informed consent. These assumptions, if ever scrutinized at all, would simply not hold up. At the very least it is clear that the Tuskegee patients, like Henrietta Lacks, were not seen or understood as being worthy of clear explanations of what was being done to them. After all, if they had understood, they might have said “no.”

Focusing on the history of biomedical experimentation on forcibly institutionalized or systemically disenfranchised populations, and on African American or female-presenting bodies in particular, would do wonders to highlight that the long-term effects of the Tuskegee trials were more than just a blanket distrust of medical experimentation throughout American society. The trials in Tuskegee, Alabama, fit into a longstanding pattern of treating Black bodies as resources to be used and as objects to be othered, dehumanized, and intervened upon in whatever ways the dominant society of the time happened to see fit. And Black bodies are not the only ones. Imagine if textbook writers such as Macrina more often took the time to discuss and contextualize events like the government and medical providers tricking Black people in Mississippi into receiving vaccinations, the forced sterilization of Black women, the way the intersection of mental health and institutionalization led to women in general being experimented on and sterilized at higher rates, or the long-term ethical and social implications of classifying certain people as “morons.”

Increasingly, the effects of these kinds of historical objectification are understood to be linked to poorer health outcomes, higher rates of chronic illness, and greater morbidity for Black people and women in the United States, and they are bound up with a longstanding history of regarding neurodivergent people and people with mental disabilities as “less than.” The omission of these discussions from textbooks and other broad public discourse exemplifies a persistent failure to fully contextualize the history and implications of these events. That this failure appears in so many ethical subdisciplines might help explain how people have so often managed to convince themselves that testing on marginalized populations without their informed consent can be said to serve the “greater good.” More often than not, “professional ethics training,” or any other take on the humanities within business or the so-called hard sciences, becomes synonymous with a particular understanding of how not to get sued. The perspectives that get passed along are those of experts in the field in question, be it business, technology, medicine, or what-have-you. Leaving students’ social science and humanities training to people who were only ever trained in this narrow, subdisciplinary fashion is precisely what leads to the continual dismissal of ethical, moral, and sociopolitical considerations, and that dismissal, in turn, gives rise to Technoableism.

If various groups want to change bodily forms and embodiments, or even just change the way that we all interact with the planet on which we currently live so that we might survive the next 30 years, then they will have to radically reconsider how sociopolitical forces and the elements of our lived experience shape the decisions we make about the science we do and the tools we create. The historical positioning of marginalized people’s lived experiences in terms of race, gender, disability, and so on has meant that while we are more than happy to test and degrade certain people for their embodiments, we have been less than willing to allow those same people to shape and direct the technoscientific discourse of which they have forcibly been made a part. This distinction, though unarticulated, matters a great deal, and its effects and implications run rampant throughout every facet of our society.

CONCLUSION

If humans do manage a future in which they travel into and live in space, they will need to change the kinds of embodiments and relations they have in order to survive; to do this, they will need to think in vastly different ways about the nature of the technological and scientific projects they undertake. Our societal imaginings of the future are rife with assumptions about what kinds of people are best suited to exist, and these assumptions have been shaped by the historical positioning and treatment of many marginalized groups. Left unexamined, these assumptions and precedents will likely mutate and iterate into each new environment into which humans spread, and affect every engagement of human and nonhuman relationships. But if we bring careful, thorough, and intentional consideration to bear on the project of weaving together biomedical, interpersonal, sociopolitical, and technomoral concerns, then we might be better able both to do right by those we’ve previously oppressed and to adapt agilely to the kinds of concerns that will face us in the future.

As Haraway discusses in her (flawed but possibly still salvageable) “Cyborg Manifesto,” the language of the cybernetic feedback loop does not belong only to humanity as a way to describe its own processes; cybernetic theory and the myth of the cyborg are also frameworks that can describe the cycles and processes of nature as a whole.[44] Through this understanding, Haraway and others have argued that all of nature is involved in an integrated process of adaptation, augmentation, and implementation which, far from being a simple division between the biological and the technological, is instead a reflexive, co-productive process. Using the theorists and examples above, I’ve argued for an understanding of biotechnological intervention and integration as the truth of our existence with and within technology. Our bodies and minds are shaped by each other and exist as bodyminds, and those bodyminds dictate and are shaped by the technologies with which they interact.

In order to carefully construct and live within vastly complex systems, it will be crucial to understand the lived experiences of those whose embodiments and bodyminds have made them more likely to be marginalized by those who demand a “right kind” of lived experience. Only by allowing them to create a world out of the lessons of their lived experience will we be better able to intentionally craft what this system and its components will learn and how they will develop. What should characterize our understanding of the cyborg, then, is the reflexive, adaptive relationship between the sociotechnical, sociopolitical, ethical, individual, symbolic, and philosophical valences of our various lived experiences.

The point in saying that “Cyborgs Have Always Been About Disability, Mental Health, and Marginalization” is not that the category of the cyborg should be closed to cyborg anthropologists and philosophers who say “we have always been cyborgs.” Rather, it is to highlight the fact that a category which was invented specifically to address the lived experiences of marginalized and oppressed people has been co-opted and transformed into a tool by which to erase the experiences of those very same people. We can, and indeed should, still make use of the Harawayan cyborg, the metaphor for entanglement and enmeshment, both as individuals and as communities, but we must do so in a way that honors both the original meaning and the evolution of the concept. We must recognize that disabled people, the neurodivergent, trans folx, Black lives, women, queer individuals, and those who sit at the intersection of any number of those components comprise individual lives and communities of experience which are already attuned to changing and adapting to suddenly hostile environments, and it is these kinds of lives which should stand at the vanguard of how we understand what it means to be a cyborg, moving forward. The concept of the cyborg was never about a perfectible ideal; it was always about survivability, about coming into a new relational mode with ourselves, our society, and our world.

[1] “Bodyminds” comes from Margaret Price, “The Bodymind Problem and the Possibilities of Pain.” Hypatia 30, 2015.

[2] Parts adapted from Williams, Damien Patrick. “A Brief Historical Overview of Cybernetics and Cyborgs,” written for History of STS, Spring 2018.

[3] Clynes, Manfred. “Simple Analytic Method for Linear Feedback System Dynamics.” Transactions of the American Institute of Electrical Engineers, October 2, 1955.

[4] Madrigal, Alexis C. “The Man Who First Said ‘Cyborg,’ 50 Years Later.”

[5] Gruson, Lindsey. “Nathan Kline, Developer of Antidepressants, Dies.” The New York Times. February 14, 1983. https://www.nytimes.com/1983/02/14/obituaries/nathan-kline-developer-of-antidepressants-dies.html; Blackwell, Barry. “Nathan S. Kline.” International Network For The History Of Neuropsychopharmacology, June 13, 2013. http://inhn.org/profiles/nathan-s-kline.html.

[6] Ibid.

[7] Clynes, Manfred E. and Kline, Nathan S. “Cyborgs and Space.” Astronautics (September 1960), 26-27, 74-76. http://web.mit.edu/digitalapollo/Documents/Chapter1/cyborgs.pdf

[8] Clynes and Kline, “Cyborgs and Space.”

[9] Kafer, Alison. Feminist, Queer, Crip. Bloomington: Indiana University Press, 2013. pg. 105, 111-115; Haraway, Donna. “A Cyborg Manifesto: Science, Technology, and Socialist-Feminism in the Late Twentieth Century.” Simians, Cyborgs, and Women: The Reinvention of Nature. New York: Routledge, 1991.

[10] Kafer, Alison. Feminist, Queer, Crip. pg. 126-128

[11] Cf. Washington, Harriet. Medical Apartheid; Tuskegee University. “About the USPHS Syphilis Study.” https://www.tuskegee.edu/about-us/centers-of-excellence/bioethics-center/about-the-usphs-syphilis-study; Skloot, Rebecca. The Immortal Life of Henrietta Lacks. New York: Crown Publishers, 2010.

[12] Washington, Harriet. Medical Apartheid: The Dark History of Medical Experimentation on Black Americans from Colonial Times to the Present. New York: Doubleday, 2006. pg. 293

[13] Verbeek, Peter-Paul. “Obstetric Ultrasound and the Technological Mediation of Morality: A Postphenomenological Analysis.” Human Studies, Vol. 31, No. 1, Postphenomenology Research (Mar., 2008), pp. 11-26; Springer. http://www.jstor.org/stable/40270638

[14] Shew, Ashley. “Technoableism, Cyborg Bodies, and Mars.” Technology and Disability. November 11, 2017. https://techanddisability.com/2017/11/11/technoableism-cyborg-bodies-and-mars/.

[15] Parts adapted from Williams, Damien Patrick, “Technology, Disability, & Human Augmentation,” https://afutureworththinkingabout.com/?p=5162; “On the Ins and Outs of Human Augmentation,” https://afutureworththinkingabout.com/?p=5087.

[16] Haraway, Donna. “A Cyborg Manifesto.”

[17] Shew, Ashley. “Technoableism, Cyborg Bodies, and Mars”; Robertson, Jennifer. Robo Sapiens Japanicus: Robots, Gender, Family, and the Japanese Nation. Oakland, CA: University of California Press, 2018.

[18] Shew, Ashley. “Up-Standing Norms.” IEEE Conference on Ethics and Technology, 2016.

[19] See Rosenberger, Robert. “The Philosophy of Hostile Architecture: Spiked Ledges, Bench Armrests, Hydrant Locks, Restroom Stall Design, Etc.” 2018.

[20] Shew, Ashley, in correspondence, 2016.

[21] Cf. Don Ihde, Albert Borgmann, Peter-Paul Verbeek, Evan Selinger, and other postphenomenologists; Williams, Damien Patrick. “Technology, Disability, & Human Augmentation”; “On the Ins and Outs of Human Augmentation”; “Go Upgrade Yourself,” in Futurama and Philosophy, Courtland D. Lewis, ed.

[22] Case, Amber. “We are all cyborgs now.” TED Talks. December 2010. http://www.ted.com/talks/amber_case_we_are_all_cyborgs_now.html

[23] Weise, Jillian. “The Dawn of the ‘Tryborg.’” New York Times, November 30, 2016. https://www.nytimes.com/2016/11/30/opinion/the-dawn-of-the-tryborg.html?_r=1#story-continues-1; “Common Cyborg.” Granta, September 24, 2018. https://granta.com/common-cyborg/; also cf. Joshua Earle, “Cyborg Maintenance: A Phenomenology of Upkeep,” presented at the 21st Conference of the Society for Philosophy and Technology.

[24] Robertson, Jennifer. Robo Sapiens Japanicus: Robots, Gender, Family, and the Japanese Nation. pg. 146-174

[25] Robertson. pg. 146

[26] Ibid. pg. 148

[27] Robertson. pg. 149

[28] Ibid.

[29] Ibid. pg. 150

[30] Ibid. pg. 152

[31] Robertson. pg. 150

[32] Ibid. pg. 153-154

[33] Ibid. pg. 155-156

[34] Ibid. pg. 157

[35] Ibid.

[36] Robertson. pg. 168-169

[37] Ototake, Hirotada. Gotai Fumanzoku (“Incomplete Body”). Tokyo: Kodansha. 1998; No One’s Perfect. Tokyo: Kodansha. 2003.

[38] Robertson. pg. 170-171

[39] Ibid.

[40] Crenshaw, Kimberlé Williams. “Demarginalizing the Intersection of Race and Sex: A Black Feminist Critique of Antidiscrimination Doctrine, Feminist Theory and Antiracist Politics.” https://philpapers.org/rec/CREDTI

[41] Hoffman, Anna Lauren. “Data, Technology, and Gender: Thinking About (and From) Trans Lives.”

[42] Dowd, Maureen. “Stripped of Dignity.” New York Times. April 19, 2011. https://www.nytimes.com/2011/04/20/opinion/20dowd.html; Crawford, Alison. “Disabled passengers complain of treatment by airport security staff.” CBC News. Sept. 27, 2016. https://www.cbc.ca/news/politics/catsa-airport-travellers-complaints-security-1.3779312.

[43] Macrina, Francis L. Scientific Integrity: Text and Cases in Responsible Conduct of Research. (Third Edition). Washington, D.C.: ASM Press, 2005. pg. 92.

[44] Haraway, Donna. “A Cyborg Manifesto.” Simians, Cyborgs, and Women: The Reinvention of Nature.
