Back in October, I was the keynote speaker for the Society for Ethics Across the Curriculum’s 25th annual conference. My talk was titled “On Truth, Values, Knowledge, and Democracy in the Age of Generative ‘AI,’” and it touched on a lot of things that I’ve been talking and writing about for a while (in fact, maybe the title is familiar?), but especially in the past couple of years. I covered deepfakes, misinformation, disinformation, the social construction of knowledge, artifacts, and consensus reality, and more. And I know it’s been a while since the talk, but it’s not like these things have gotten any less pertinent, these past months.

As a heads-up, I didn’t record the Q&A because I didn’t get the audience’s permission ahead of time, and considering how much of this is about consent, that’d be a little weird, yeah? Anyway, it was in the Q&A section where we got deep into the environmental concerns of water and power use, including ways to use those facts to get through to students who possibly don’t care about some of the other elements. There were honestly a lot of really trenchant questions from this group, and I was extremely glad to meet and think with them. Really hoping to do so more in the future, too.

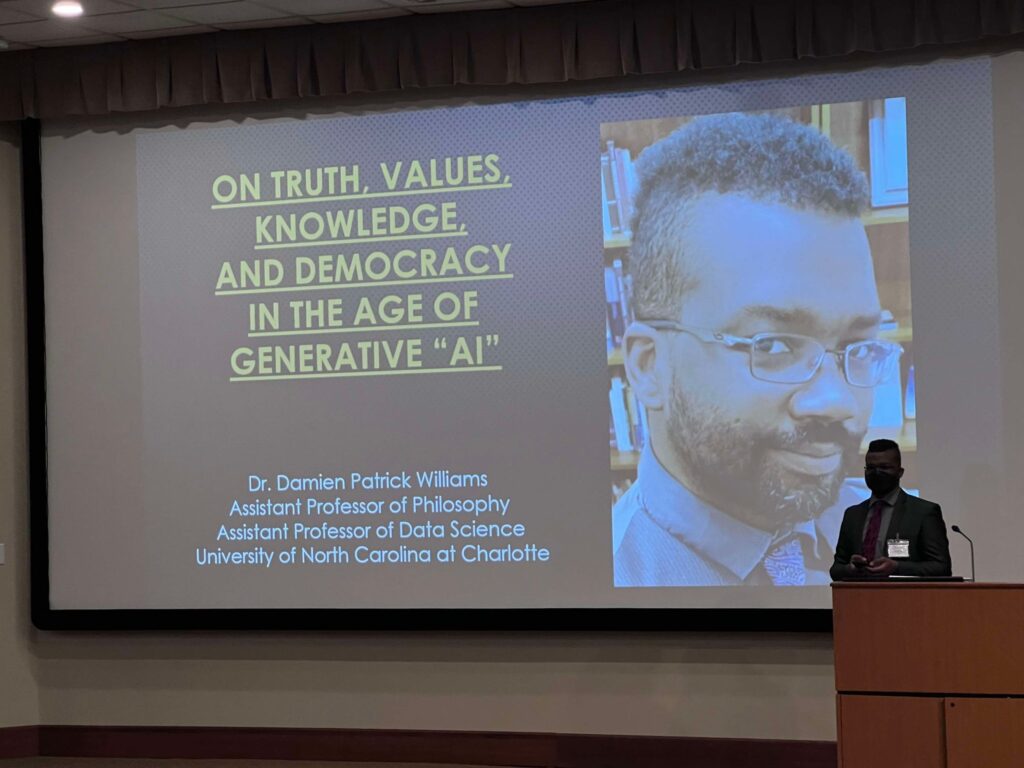

Me at the SEAC conference; photo taken by Jason Robert (see alt text for further detailed description).

Below, you’ll find the audio, the slides, and the lightly edited transcript (so please forgive any typos and grammatical weirdnesses). All things being equal, a goodly portion of the concepts in this should also be getting worked into a longer paper coming out in 2025.

Hope you dig it.

Until Next Time.

Audio:

Slides:

DPW_-_TVKD-GenAITranscript:

0:03

I’m going to turn this mic on because I’m probably going to wander a little bit, and this will help make sure that everybody can still hear me, even as I do.

Thank you very much for that introduction. I really appreciate it. I really appreciate all of you for being here today. I appreciate you taking your time and joining me at lunch. This is going to be a talk that’s going to talk about the kind of sociotechnical values and impacts of “AI,” generative “AI” in particular, more than it talks about the specific technical details of “AI”. That being said, one of the things that we are going to talk about, just to get us started, is going to be a little bit, a very little bit, about how generative “AI” works. When we talk about things like GPTs, large language models, or any number of other things that we put under the header of “AI” writ large, what we are talking about is, in a very real sense, a kind of system of interoperable algorithms that are used to weight, arrange, sort, and return large associations of language. Large language models are the kind of overarching framework under which things like GPTs, the text-based transformers from OpenAI, and things like Google Gemini operate; these are the frameworks on which they work.

Large language models can also be applied to things like text generators. They can be applied to video generators, and in the process of doing that, what they do is they take that large batch of associated language data, and they then frame it and associate it, tag it to video, images, or audio with which they have also been trained. And so the process is about creating a greater fidelity of statistical correlation between text and text, text and image, text and video, or text and audio. In order to do any of this work, what you have to do is you have to have human beings go in and also engage in a process of what’s known as reinforcement training. That reinforcement process oftentimes requires a large group of humans to teach these systems whether they’ve gotten it right or whether they’ve gotten it wrong, and then to nudge them closer and closer towards getting it right. The question of, “What does right mean in this context and who is doing that training?” undergirds all of this. The data that large language models use can be in the billions, or now even trillions, of tokens large. And when we say token, what we mean in that space is a word or a phrase.

The word “token” is used to talk about a word or discrete chunk of language which is associated in a particular way. At the top of this slide, what I have is a very simple vector map. It is a GIF that shows the associations between certain words within a vector space. This is how the original forerunner language models worked, things like GloVe or Word2Vec, which were used up until about 2016 as the kind of cutting edge of language modeling in the “AI” space. This is how you could see them doing the work that they do. And if you take a look at the particular map that I’ve provided here, the one that I’ve chosen that I use for most of the conversations I have around this space, you’ll probably notice a very particular set of associations happening in this. You see the word “man” and the word “king” are mapped in a vector space. That is, they are related to each other closely. When you plot out how words are connected to each other, what connotations words have, these show up very near each other, and the word “woman” and the word “queen” show up very near each other in that vector space. The thing of it is that when you look at the vector space, the word “woman” doesn’t just appear next to the word “queen,” and vice versa.

The word “woman” appears next to the word “secretary,” very closely in the vector space, the word “nurse,” the word “teacher.” And it appears very distant from the words “president,” “doctor,” “CEO.” This is a visual representation of how biases get embedded within language modeling systems. The data on which they are trained comes from sources which have those biases encoded in them. This has become a kind of a standard understanding throughout large language model systems over the past three to five years: biased training data gets biased results. But many people don’t know that the biased training data that we’re dealing with, in many cases, is decades old, because the thing about training data and the thing about training modeling systems is that it costs money. Large language systems, and language models before them, use what are known as language corpora. These are huge rafts of text that are designed to give a system something to work with, something that can easily be tokenized, picked apart, and associated within itself for the system to learn from. And these language corpora have to be built. They have to be put together out of existing data, because the goal in all of this is natural language.
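A minimal sketch of that vector-space idea, under loud assumptions: the three-dimensional vectors below are invented by hand purely for illustration, and the names `toy_vectors`, `cosine_similarity`, and `nearest` are hypothetical. Real models such as GloVe or Word2Vec learn vectors with hundreds of dimensions from a corpus. The sketch only shows how "nearness" between words is typically measured, and how a skew in the vectors shows up as a skewed association.

```python
import math

# Hand-made toy vectors (an assumption for illustration, not real embeddings).
# They are deliberately skewed the way a biased corpus might skew learned ones.
toy_vectors = {
    "man": [0.9, 0.1, 0.8],
    "woman": [0.1, 0.9, 0.8],
    "king": [0.9, 0.1, 0.9],
    "queen": [0.1, 0.9, 0.9],
    "doctor": [0.8, 0.2, 0.3],
    "nurse": [0.2, 0.8, 0.3],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word, candidates):
    """Return whichever candidate word sits closest to `word` in the toy space."""
    return max(
        candidates,
        key=lambda c: cosine_similarity(toy_vectors[word], toy_vectors[c]),
    )


if __name__ == "__main__":
    for word in ("man", "woman"):
        print(word, "is nearest to", nearest(word, ["doctor", "nurse"]))
```

Nothing in the arithmetic knows anything about gender or professions; the association falls out entirely from where the numbers put each word, which is the point about training data made above.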

This diagram at the bottom comes from OpenAI directly. So take it with a grain of salt. But the idea is that the system is prompted. It is used in an untrained way, and that untrained result is then compared to the desired output. It is then retrained toward it, and then that is put into practice to generate new results. It’s a feedback system. That’s what reinforcement means in the space. But the language corpora that are used have to come from somewhere. For large language modeling systems, the language corpora that are used are the internet as a whole, which is why you’ll hear Sundar Pichai or Sam Altman saying, “We can’t do the work we do unless we can scrape everything we want from the internet.” Prior to this, one of the main corpora in use was what was known as the Enron corpus. And the Enron corpus is a raft of about 600,000 emails from the Enron Corporation that were put into the public domain when the Enron Corporation was on federal trial for public corruption and defrauding their investors. So that’s exactly what you want, training a language system. What we see as a result of this is that language modeling systems that use the training data that they are given replicate, iterate on, and exacerbate the inbuilt biases and perspectives of the data that they are trained on. And so you’ll see what seems like surprising gendered bias or racial bias or ableist bias that shows up within the system, and yet it should not, in fact, be surprising, because those systems are trained on data, human interactions, which contain those biases within them.

8:56

At the end of the day, a large language model system, a GPT, does not care about giving you a correct answer. What these systems do is they statistically model the most likely, best acceptable result. They are, quite simply, trying to tell you a story that will jibe with your preconceptions based upon the inputs that you have inserted into the system. That’s not truth. A statistically likely acceptable result is not the same thing as a fact. In fact, in philosophy, we have a word for someone or something that doesn’t care about the truth or falsity content of the speech act that it’s given; that word is bullshit.

In 2004 Harry Frankfurt wrote the book on bullshit, and in it, he talks about this idea of something or someone that does not seek to deceive you specifically, or does not seek to convey the truth specifically. In epistemology and in philosophy of language, when we talk about speech acts and we talk about truth value, what we are talking about is something very specific. If I seek to tell you the truth, that is, I am seeking to tell you what I believe corroborates with the facts of the world as I understand them, right? That is me trying to tell you the truth. If I am mistaken about that truth, I have made a mistake. I have not lied to you. I have spoken in error. I have had an error in my own understanding or in my relation of my understanding to you.

To lie to you, I have to deceive you. And in order to deceive you, or to seek to deceive you, I have to tell you something which is counter to the facts as I know them. If I seek to tell you something that is counter to the facts as I know them, I have to know what the facts are. I have to be able to deceive you, to move you away from those facts. Bullshit is neither of those things, and it’s not a mistake. For Frankfurt, bullshitting is the process of telling a story where you don’t care whether it’s true or false, whether it conforms to the facts of the matter or whether it does not. And for Frankfurt, this is the most dangerous, the most potentially harmful kind of speech act, because there is no goal for the bullshitter other than telling a story. And if you’re Tom Waits and you’re spinning a yarn, that’s one thing. But if you are trying to have a conversation about shared public values, if you’re trying to provide a system which allows for people to make decisions about their day to day life, that’s a very dangerous thing indeed.

12:38

It is, in fact, currently the case that every head of the major generative “AI” companies right now has, to some extent, admitted what is the case about generative “AI”. Whether you’re talking about Sundar Pichai, Tim Cook, or Sam Altman, they have all, at some point in the very recent past, let it slip that there is no way to have a large language model based “AI” system that doesn’t hallucinate. Take that in for a second: a system which is being integrated into search, a system which is being used to help people find answers, a system which is being used in every area of academia, a system which is being used by researchers, a system which is being sold to the public as the next big thing since sliced bread, or since Google Search came on the market, cannot help but bullshit you. There is no way to eliminate that practice. You can mitigate it, you can minimize it, but you will never stop it from, at some point in its operation, fabricating, whole cloth, something that does not necessarily conform to reality, and it will present it to you with the same certainty as it would present any other fact that does conform to reality.
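The point above about statistically modeling the most likely acceptable result can be sketched with a deliberately tiny stand-in. This is a bigram counter, not how a GPT is actually built, and the corpus and the names `train_bigrams`, `most_likely_next`, and `generate` are all invented for illustration. What it shows is only the shape of the problem: the generator picks whatever followed most often in its training text, and nothing in it ever checks the output against the world.

```python
from collections import Counter, defaultdict

# Invented toy corpus (an assumption for illustration): two of the three
# sentences say "safe", so "safe" is the statistically likely continuation.
corpus = (
    "the mushroom is safe to eat . "
    "the mushroom is safe to eat . "
    "the mushroom is deadly to eat . "
).split()


def train_bigrams(tokens):
    """Count, for each token, how often each other token follows it."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word` in the training text."""
    return counts[word].most_common(1)[0][0]


def generate(counts, start, length):
    """Greedily extend `start` by `length` tokens, always taking the likeliest."""
    output = [start]
    for _ in range(length):
        output.append(most_likely_next(counts, output[-1]))
    return " ".join(output)


model = train_bigrams(corpus)

if __name__ == "__main__":
    print(generate(model, "the", 5))
```

The sketch produces a fluent, confident sentence either way; whether the particular mushroom in front of you is safe never enters into it.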

14:39

There are a number of places in which we’ve seen algorithmic systems interwoven into our lives, and in all of these places, we are beginning to see generative “AI” supplemented within these spaces. Every single one of these headlines is here to highlight something about the problems with “AI”, right alongside the fact that people are still rushing full steam ahead to use it. (By the way, when I say “AI”, you should imagine that I’m putting it in scare quotes every single time, and I can get into that later.) But the basic fact of the matter is we don’t know what this system is, and the word “AI” (“artificial intelligence”), as a term, has become more of a marketing tool than something that points to anything real or concrete. It is a moving cipher seeking a referent.

Algorithmic bias shows up in disability benefits systems, and generative “AI” systems, which are being used to guide people through getting benefits, replicate the same kinds of ableist biases that we’ve seen in previous, older versions of the system. Bias shows up with regard to gender and who should get what kinds of health care. It shows up, and it has shown up, in facial recognition systems and the “AI” that uses these facial recognition systems as the basis to make determinations about who has committed a crime. Every single misidentification and erroneous prosecution of someone based on facial recognition data and an “AI” backed system of facial recognition has been a black person, because facial recognition systems don’t see dark skin well, and the “AI” systems that are used to sort through those videos are still being trained on and operating out of a basis of a repository of data about misidentification of black people. We have a raft of supposed tools that are meant to help us determine whether students are cheating on tests, whether they’re using “AI” in their assignments: those GPT detection tools, the ones that are on the market that are meant to tell you whether something is “AI” or not. Yeah, they don’t, in fact, do very well with anyone whose first language isn’t English. They ping more often on non-native English speakers than on native English speakers, and we’ve seen the results of this. When “AI” translation is used to try to translate and process the applications of asylum seekers, their applications are more likely to be rejected.

17:57

There are instances where people are trying to use “artificial intelligence” in finding homeless encampments, with the ostensible goal of directing city services to help people in need. But as we have seen in multiple places around the country, most notably and most recently in California, oftentimes the goal is to simply remove those encampments. “AI” is being used to determine what targets to hit in military conflicts.

18:37

In Israel’s war against Hamas, they use a system that’s known as, or referred to as, Lavender, or in some cases, the Gospel. And I have a whole other talk about what happens when you start to apply religious connotations to “AI” systems, and what that does in people’s minds. And those are different but very connected things to what we’re talking about here today. Because what it does is it certifies certainty in people’s minds. It gives them a framework of guarantee and expertise that says, “This is trustworthy, this is correct, this is infallible. It is the gospel. It is math, it is code. It can’t have biases in it. Can it?” And yet we see over and over and over again that it can, and it does. In a theater of war, this is literally a matter of life and death, but it can be about life and death at home, too.

There’s been a raft of “AI” generated mushroom foraging guides available for sale on Amazon. Let me tell you a fun thing about mushrooms.

A lot of them look very, very similar, and sometimes what you think is a mushroom that means, “Mmm, tasty dinner” is actually a mushroom that means, “oh no, I’ve liquefied my liver.” If you get a particularly poisonous and toxic mushroom, you will die, and it will hurt the entire time that you are dying. An “AI” generated mushroom guide is a generated guide that is based on images of what a mushroom is statistically supposed to look like. It is not a replication of what mushrooms actually are. It has taken every iteration of a mushroom on the internet, has taken the associated tag data about that particular image of a mushroom, has combined it all into a training data set, and has been trained to be statistically more likely to correlate those components of data in a way that the user will accept. When I’m asking it to tell me a story about how some geese went for a pleasant walk in the woods and had a delicious dinner, that’s all well and good to get a statistically-more-likely-to-be-accepted response from this system; when I might literally die or kill my family based on the results, not so much.

But this is because “artificial intelligence” systems do not care about facts. They cannot, so far as they are currently constructed, care about facts. They care about “Will you accept this result based on what you ask me? Based on how you framed the question— based on the exact words you used to send a prompt to this system— will you like what I give you as a result?” Very different; very dangerous. Not at all “facts.”

22:30

There are more subtle problems with this framework as well. We talked about the fact that the vast majority of people who are misidentified by facial recognition enabled “AI” systems were black. All of them so far have been. There’s also the problem with people thinking that some people are “AI” systems when they’re not. This connects directly to the previously noted problem of non-native English speakers being misidentified by “AI” detection systems. When we rely on “AI”, when we certify “AI” systems as arbiters of truth, as determinators of fact, what we are doing is we are giving over to these systems the ability to make judgments for us. We are imbuing them with heuristic capabilities that they do not possess. And when we begin as a culture, as a society, to rely upon them, we begin to reflexively enact the kinds of judgments that a system might make. And we begin to believe that we can see the markers of quote, unquote, “AI” where they do not exist. And when it comes to an exchange like this [Shows an image of this exchange], what we are doing is using “AI” as an intermediary to enact some of the oldest ableist bias against autistic individuals that there ever has been, and that is the bias of them seeming to us to be “robotic.”

24:33

The problem here is not and never has been that “AI” is “out of step with our values;” it’s that the values that it is in step with are very, very human, woefully underexamined, and often terrible. If we want technological systems which do not depend on predatory, extractive logics, which don’t replicate biases against gender, disability, or race, we’re going to have to build these systems in a much more intentional, much more equitable, and overarchingly just way. Otherwise, all of those biases will just get hustled in under whatever the next new big thing is to begin with, and will get used in multiple different places.

25:36

This election cycle has been a weird one. We have seen the use of generative “AI” on multiple sides of political spectra. We’ve seen the use of “AI” generated Taylor Swift fans and Taylor Swift videos to claim endorsements for multiple candidates. Donald Trump wasn’t the first to do it. He was just the first to claim it out loud and in public.

26:10

We’ve seen “AI” generated audio being used to try to sway the decisions of voters. We’ve subsequently seen from the FCC a directive that “AI” generated audio in campaign ads is illegal. That happened very quickly. This ad went out in June or no, sorry, January. It was January 9th of this year, the FCC decision came down in February that this was no longer acceptable; and just this week, a $6 million fine came down against the person who initiated this “AI” generated Biden ad.

27:03

Oftentimes our reactions to new technologies are slow because the ways that we think about them are uncertain. They are new, they are unfamiliar. We don’t necessarily have a framework in which we can fit them. And a lot of times that slowness is intentional. The ways in which we think about these things are directed by the people who get to have a conversation about them, the people who get to drive these conversations. And so when we think about the fact that we are in a moment right now where things like diversity, equity, and inclusion are points of cultural contention, right at a time when we have to think very carefully about the effects of a more diverse, more equitable, more inclusive, and more just way of operating within the frame of everything from the political sphere to the technical training of the people who build our technologies day in and day out, those questions become deeply crucial. It is somewhat telling in its own right. Because whoever controls the definition of a thing controls the conversation around that thing; whoever controls the conversation around the thing gets to shape the cultural narrative about the thing.

When Sam Altman tells you “I can’t do my job if I can’t use copyrighted material,” he is a) lying to you, but b) trying to get you to accept a particular way of thinking and operating within the framework of these technologies. When Meta partners with Ray-Ban to make facial recognition enabled smart glasses that they put on the market and put in every single commercial break of every streaming show you watch or every television show you put on, they are trying to get you locked into a way of thinking about these technologies, which is, in fact, not necessarily the way it has to be. But they want you to believe that it is.

It is not inevitable that these tools, that these systems, are built in the ways that they are built, but believing in their inevitability allows for the people who make the systems to tell us how it then has to be from that point forward. If they’re inevitable, then we “have” to build them. And if we build them, then our “competitors” can’t build them, or we get to have the edge over how they’re built. We get to direct the market on how they’re built, on what tools, what capabilities are available within these systems. “If Nvidia builds these chips, then we don’t have to buy them from China. If we make these chips in Ohio, we don’t have to worry about what other kind of firmware is hustled into the system.” But all of that puts this in a state of what is known as paradigmatic capture.

When you capture the paradigm, the way of thinking about the thing, from top to bottom, you get to then determine how everyone else thinks about it and deals with it from that point forward. So obfuscation, misinformation, disinformation, are all mechanisms of this kind of control.

When we think about how we construct knowledge together, how we build systems of knowledge, we are thinking about these ideas of objectivity, of the fact, but we also have to think about the question of intersubjectivity, of making knowledge and our shared understanding of reality together. This is from Daston and Galison’s book Objectivity [2007], and it talks in many, many pages about the idea of how we come to think about what is and is not objective truth. What is the foundation of truth? When we think about an anatomical drawing or a nature drawing of a leaf or a taxidermy representation of an animal, what is the goal of that representation? Is it perfect re-inscription of one particular animal, one particular plant, or is it a way of trying to capture something about all forms of that animal, that plant? And this is a debate that’s been alive within naturalism for centuries, quite frankly.

What is the goal of representation? If the image is there to represent one thing, then we can be very, very specific. We can be very careful. We can highlight each individual layer of fur, each distinct articulation of a claw or a paw pad, the arch of a leg in a fox. But not every fox has that pattern to its fur. Not every fox has that arch to its legs. So what are we seeking to represent? When we think about a generative “AI” system, are we seeking to represent the factual truth of the matter, or are we seeking to represent, in a broad way, the generally accepted perceptions of things as they tend to be recognized by people? More to the point, as they have tended to be recognized.

Because what we do, each and every time that we use a generative “AI” system, is we point to the past. Because every single “AI” system that exists uses data from the past. It is trained on things that have been, and yet it is used to teach us something, or to try to teach us something, about what might be, a task for which these systems are fundamentally not suited. If you want to look at the way that people thought about race and gender and sexuality up until even 2021, OpenAI has got the system for you. But if you want something that can tell you how things might be tomorrow, how things might be five years from now, how things ought to be 10 years from now, that is not what these tools are for.

Expertise, expectation, and the shaping of disciplinarity all go into how everybody thinks about what these tools are, what they can be, and what they should do. And reflexively, these systems shape people’s expectations about how we should think about knowledge, expertise, and disciplinarity. We can think about the social shaping of technology or social constructivism. We’ve got Melvin Kranzberg. We’ve got Karin Knorr-Cetina. We’ve got Safiya Noble. We’ve got Langdon Winner. We’ve got Thomas Kuhn, we’ve got Bruno Latour and Steve Woolgar. We’ve got Michel Foucault. We have a bunch of people who have talked about the ways in which the social implications of technology shape our understanding of these things. But the basic framework of all of this, the foundation of all of this, is that, though we embed our values in technology, we cannot simply use technology to reframe our values. We cannot technofix our way out of values problems, because when we try, weird stuff happens.

When Google reframed their Bard system to Gemini and started integrating audio, video, and images into the Google Bard system, somebody who was trying to prove a point about wokeness got a little muddled. So they were trying to prove a point, and they asked it for racially diverse Nazis. And Google’s Gemini system generated a picture of racially diverse Nazis, because that’s what it was asked to do, and that’s all the system does: what it’s asked to do. It is not a way to mirror reality, and trying to make a technology simply be the arbiter of equity and justice in our society, in the name of a more equitable or more just world, comes with potentially deeply offensive results that do more harm than they do good.

And that brings us to a central question: Since we cannot but have values embedded in our technology, and since we have to take a close look at the values we want embedded in our technology, whose values, which values do we want embedded in our technology? You will not be able to have an unbiased “AI”. You will not be able to have a completely value free, value neutral technological system. It is not possible. We are human beings, and everything we touch, we put our perspectives into; so what values do we want in the things that we create?

There are a whole host of people who are doing a whole bunch of work on these types of systems: executive orders and new “AI” acts from places like the EU and the United States and China, which are driving the development of their “artificial intelligence” systems. China just decreed that there has to be a way to digitally watermark every “AI” generated piece of data that comes out of an “AI” system, or it cannot exist as a technology in China. This is something that was proposed back in 2022 as a solution to the question of how we know what’s “AI” and what’s not, which “AI” companies said was completely infeasible, and which trained “AI” engineers said could not be easily done. In just the past year, actually in the past three months, it has been revealed that not only is it feasible, not only can it be done, it has been being done since the beginning. OpenAI has had a system to steganographically imprint within its texts a marker that says, basically, this is “AI” generated text, such that anything that comes out of its system is identifiable 90% of the time, absent massive editing by somebody who takes what comes out.

This is something that can be done with something as simple as word order, with chosen language, things that you can weight for (and by which I mean W, E, I, G, H, T, weight for) within the model. You can alter how it chooses the words that it chooses, and you can get outputs that will always be identifiable by the system that generated it. It’s basic and inherent to how the system functions. Does it take a little extra time? Yes it does. Does it require a little bit of extra effort on the part of the engineers? Yes it does, and that’s why it was said to be infeasible, impossible, until we learned that it was done. How do we bridge the different needs and the different perspectives of the people doing this work? There are groups, some of which I work with. These are two teams that I work with at UNCC. We are working on ideas of new technologies and new ways to build these technologies. In fact, before the revelation that the steganographic techniques were being used, we were some of the first people to put forward policy positions suggesting steganographic watermarking in all “AI” products. But this isn’t the only way we can move forward.
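To give a feel for how weighting word choice can leave an identifiable mark, here is a toy sketch. It is emphatically an assumption: it is not OpenAI’s method, whose details are not described here, and the names `is_green`, `choose_word`, `write_watermarked`, `green_fraction`, and the example `slots` are all hypothetical. The idea is that a hidden rule marks some word choices as “green,” the writer leans toward green words whenever it has interchangeable options, and a detector that knows the rule counts how many green choices a text contains.

```python
import hashlib


def is_green(prev_word, word):
    """Hypothetical secret rule: hash the (previous word, word) pair and
    call the word 'green' when the first byte of the hash is even."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return digest[0] % 2 == 0


def choose_word(prev_word, candidates):
    """Pick the first green candidate; fall back to the first option if none."""
    for candidate in candidates:
        if is_green(prev_word, candidate):
            return candidate
    return candidates[0]


def write_watermarked(slots, start="<s>"):
    """Fill each slot (a list of interchangeable words), preferring green ones."""
    words, prev = [], start
    for candidates in slots:
        word = choose_word(prev, candidates)
        words.append(word)
        prev = word
    return words


def green_fraction(words, start="<s>"):
    """Detector: the share of words that are green given the word before them."""
    if not words:
        return 0.0
    prev, green = start, 0
    for word in words:
        green += is_green(prev, word)
        prev = word
    return green / len(words)


# Invented example: each slot lists words a writer might treat as equivalent.
slots = [
    ["the", "this", "that", "one"],
    ["big", "large", "huge", "vast"],
    ["dog", "hound", "pup", "canine"],
    ["ran", "sprinted", "dashed", "raced"],
    ["home", "back", "away", "off"],
]

if __name__ == "__main__":
    text = write_watermarked(slots)
    print(" ".join(text), "| green fraction:", green_fraction(text))
```

Ordinary text would be expected to land near half green under a rule like this, while text written with the preference lands well above it, and heavy editing pulls it back toward half, which fits the caveat above about massive editing.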

40:24

There have to be a number of voices working together to try to expand not just the technical education, but understanding of the social and human implications of “artificial intelligence” and everything that falls under that very nebulous, empty cipher of a header.

Big wall of text: Ultimately, if we want technological systems that don’t require those predatory logics or frameworks, we have to work very hard to educate ourselves, to educate our students, to educate our communities about how these systems work, what they can and cannot do, what they are and are not best suited for, and how we might work together to build something more equitable and more overarchingly just in their place.

Thank you very much for your time and for your attention.
