So with the job of White House Office of Science and Technology Policy director having gone to Dr. Arati Prabhakar back in October, rather than Dr. Alondra Nelson, and the release of the “Blueprint for an AI Bill of Rights” (henceforth “BfaAIBoR” or “blueprint”) a few weeks after that, I am both very interested and also pretty worried to see what direction research into “artificial intelligence” is actually going to take from here.

To be clear, my fundamental problem with the “Blueprint for an AI Bill of Rights” is that while it pays pretty fine lip-service to the ideas of community-led oversight, transparency, and abolition of and abstaining from developing certain tools, it begins with, and repeats throughout, the idea that sometimes law enforcement, the military, and the intelligence community might need to just… ignore these principles. Additionally, Dr. Prabhakar was director of DARPA for roughly five years, between 2012 and 2017, and considering what I know for a fact got funded within that window? Yeah.

To put a finer point on it, 14 out of 16 uses of the phrase “law enforcement” and 10 out of 11 uses of “national security” in this blueprint are in direct reference to why those entities’ or concept structures’ needs might have to supersede the recommendations of the BfaAIBoR itself. The blueprint also doesn’t mention the depredations of extant military “AI” at all. Instead, it points to the idea that the Department of Defense (DoD) “has adopted [AI] Ethical Principles, and tenets for Responsible Artificial Intelligence specifically tailored to its [national security and defense] activities.” And so with all of that being the case, there are several current “AI” projects in the pipe which a blueprint like this wouldn’t cover, even if it ever became policy, and frankly that just fundamentally undercuts much of the real good a project like this could do.

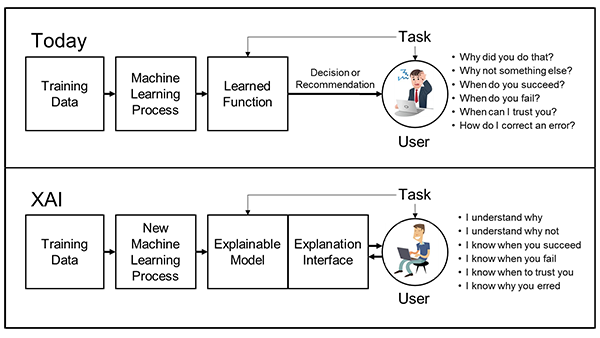

For instance, at present, the DoD’s ethical frames are entirely about transparency, explainability, and some lip-service around equitability and “deliberate steps to minimize unintended bias in AI …” To understand a bit more of what I mean by this, here’s the DoD’s “Responsible Artificial Intelligence Strategy…” pdf (which is not natively searchable and I had to OCR myself, so heads-up); and here’s the Office of the Director of National Intelligence’s “ethical principles” for building AI. Note that not once do they consider the moral status of the biases and values they have intentionally baked into their systems.

An “Explainable AI” diagram from DARPA

What I mean is, neither of these supposedly guiding, foundational documents considers questions such as how best to determine the ethical status of an event in which, e.g., someone is (or several someones are) killed by an autonomous or semi-autonomous “AI,” but one the goings on inside of which we do observe and can explain; and the explanation for what we can observe turns out to be, y’know… that the system was built on the intensely militarized goals of fighting and killing a lot of people for variously spurious reasons. Like, that is what these systems are by and large intended to be for. Those are the questions which precipitate their commissioning, the situations they’re designed to engage, and the data they’re trained to learn from in order to do it.

And because the connections in this country between what the military does and what local civilian police want to do are always tighter than we would prefer, the San Francisco Police Department was recently granted, and then subsequently at least temporarily blocked from exercising, the ability to use semi-autonomous drones to kill people in “certain catastrophic, high-risk, high-threat, mass casualty events.” Now I warned you seven years ago that this was going to happen and since then some of my stances on things have changed in terms of degree, but the core elements unfortunately haven’t. That is, while I am more strident in my belief that certain technologies should be abolished and abstained from until our society gets its shit together, I am no less sure that we are a long way from said getting together of our collective shit.

We are talking about giving institutions founded in racism the ability to deploy a semi-autonomous militarized tool to interface with and carry out the goals of a fundamentally racist technosystem. What could possibly go wrong?* J/k, so so very much is gonna go wrong, holy fucking shit it’s bad. (And yes, an interlocking system of systems doing precisely what its component parts were designed to do can, should, and must be described as “going wrong” when said system-of-systems’ perfect functioning results in people’s mass persecution and death.)

Additionally, there are the teams at InnerEye and Emotiv out of Israel and SilVal respectively, who are looking to get BCI neurochips out to the public and are specifically marketing them as on-the-job augmentations. Now, as has previously been discussed by myself and others, there are vast and dangerous implications to algorithmically mediated job-related surveillance, generally referred to as “bossware.” Whether it’s the chronic stresses of being surveilled, or the unequal pressures and oppression of said surveillance on the bodyminds of already-marginalized individuals and groups, bossware does real harm of both an immediate and long-term nature.

Now take the fact of all of that, and turn it into a chip implanted in your brain that not only monitors your uptime, but the direction of your gaze, your resting eye movements, and your endocrine response to certain stimuli. Now remember that these chips claim to be able to not just read brain-states, but to write them as well.

Here are three true things:

- BCIs have been a dream of mine since I was a small child. The idea of being able to connect to a computer With My Mind? Has always been appealing.

- BCI could be an amazing benefit to a lot of people, in terms of being able to keep tabs on chronic disabilities or even make connected implantable devices and limbs more directly operable.

- And I will not willingly put a BCI built out of predatorily capitalist, disableist, racist, and elsewise bigoted values into my body, and any BCI built for profit and sold on market will have at least two of those biases, if not all of the above. BCI is already touted as a way to “fix” autistic people (i.e., to make them more “normal”), and that is a harmful and dangerous mentality from which to undertake the development of a technology which is literally meant to rewire people’s brains.

Add to that the immediate and contemporary fact that each of these companies uses forced labor in China to make the shit they make and makes their money by muddying and casting doubt on factual information and democratic processes, plus the history of racist and misogynist medicalization, not to mention rampant transphobia, and it’s a recipe for really bad shit to get trumpeted as miracles in WIRED or on CNET or wherever.

Further, we have companies like Facebook deploying half-baked “AI” large language models and then claiming that the systems were “used incorrectly” when people stress test them to show exactly how systemically, prejudicially biased said models are. Yann LeCun had a several-hour-long, multi-thread argument with several “AI” researchers and ethicists who were quite frankly extremely generous in explaining to him that when people put “Meta’s” Galactica model through very simple paces to check for things like racism, antisemitism, ableism, misogyny, transphobia, or potential for abuse, that wasn’t people “abusing” or “misrepresenting” the model. Rather, it was people using the model exactly as it was billed to them: As an all-in-one “AI” knowledge set designed to distill, compile, or compose novel scientific documents out of what it’s been fed as training data and what it can search online.

That’s how it was sold by LeCun and others at “Meta,” that’s how people thought of it as they tested it out, and those datasets and the operational search, sort, and generate algorithms used in that way are what provided for a wide range of truly bad results, ranging from just gibberish to the truly and deeply disturbing.

So, again: When the most powerful interlocking corporations, militaries, intelligence agencies, and carceral systems on the planet refuse to even acknowledge the values and prejudices they’ve woven into their systems, and the potential policy guidance that would govern them gives them a free pass as long as they can show that they’re an interlocking system of capital and cops, spies, and soldiers, then what can possibly be done to meaningfully correct their course? (And also let me ask again, as I’ve asked many times before: Even if these systems ever do anything like what their creators claim, rather than merely being nothing but a hype-soaked fever dream, are these really the people and groups we want building these tools and systems, to begin with?)

So, yes: The BfaAIBoR contains lots of the right-sounding words and concepts, and those words and concepts could facilitate some truly beneficial sociocultural and socioeconomic impacts flowing from the BfaAIBoR out into the “AI” industry and to the rest of us who are in relational context with and subject to the systems that industry produces. But unfortunately it contains none of the real and meaningfully actionable frameworks or mechanisms which would allow those impacts to come to fruition. So like I said: I’m intrigued, but also worried.

And so, with all of that being said, it’s important to note that this “Blueprint For An AI Bill Of Rights” isn’t law or even a set of real intragovernmental policy directives yet; it’s just a (very) preliminary whitepaper. And since that is the case, it means that two further things are true:

- As I learned in my time at SRI International, many firms take whitepapers very seriously, as they give them direct lines into the kinds of concept structures their potential funding agencies are going to be looking for; that will also undoubtedly be the case with this blueprint. And so

- Now is absolutely the very best time for those of us who care about this sort of thing to really make some serious noise about it— to help enact meaningful changes to its structure and ideas— before it has a chance to become official policy.

That is, it will be far easier to get beneficial, meaningful, and substantive oversight, regulatory, and even just values-based changes made now, than it will be to try to make them once the BfaAIBoR or something else very similar to it is fully in place. And we need to do this as soon as possible because, as they’ve shown us all time and time again, these groups really absolutely cannot be counted on to adequately regulate and police themselves. Like I said: R&D firms scope out even preliminary governmental whitepapers, looking for guidance as to how best to appeal to their potential funders; and the thing to remember about that is that lacunae and loopholes are a form of guidance, too.

A document like the Blueprint should be created, applied, enacted, and adjudicated under the supervision of those who know the shape of damage these tools can cause; it should be at the direction of those who know what these systems can do and have done that the rules for building these systems are written. It should be in the care of those who have been most often subject to and are thus most acutely aware of the oppressive, marginalizing nature of the technosocial systems that make up our culture— and who work to envision them otherwise.

December 7, 2022: This post was updated with the results of the more recent vote by the San Francisco Board of Supervisors to “pause” the SFPD’s use of semi-autonomous lethal drones.