We do a lot of work and have a lot of conversations around here with people working on the social implications of technology, but some folx still don’t quite get what I mean when I say that our values get embedded in our technological systems, and that the values of most internet companies, right now, are capitalist brand engagement and marketing. To that end, I want to take a minute to talk to you about something that happened this week. Just a heads-up: this conversation is going to mention sexual assault and the sexual predatory behaviour of men toward young girls.

So here’s the fucking thing: An increasing number of text-integrated platforms have begun incorporating a “Suggested Responses” feature, whether that means Gmail’s autocomplete suggestions (or even their whole-cloth short-sentence responses) or Facebook’s “Suggested Emojis” on mobile. Thing of it is, the algorithms that run these are, yes, learning, but they are certainly not yet robust or well-formed, especially in Facebook’s case. I say especially Facebook because, while Gmail has figured out how to achieve something like a suitable range of tone and tenor for its preloaded email responses, Facebook’s emoji suggestions just offered me heart-eyes, heart, fire, and other fanatically supportive options on a story about the sexual assault of a 17-year-old girl.

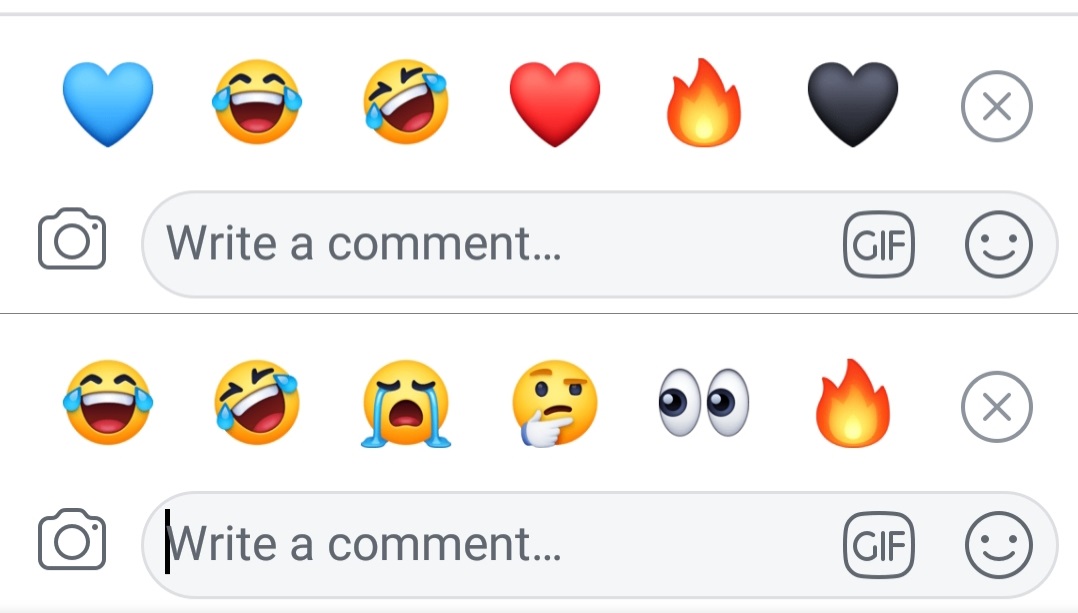

[Two rows of emojis (not the original inciting options) above their respective comment entry fields on FB Mobile. Top row emojis: Blue Heart; Cry Laugh; Hysterical Cry Laugh; Heart; Fire; Black Heart. Bottom row emojis: Cry Laugh; Hysterical Cry Laugh; Weeping; Hmmm; Eyes; Fire.]

And why did it give me this range, you might ask? Well, I don’t have direct access to Facebook’s algorithms (and if they have their way, I likely never will), but based on what we know about how these systems tend to work, I can guess that it suggested these emojis because the perpetrator of said assault was a famous person whose name is very frequently responded to with that range and tone of emojis, and the assault in question took place at one of his concerts.

No, not that guy. The other guy.

To clarify, the fame and adoration of the perpetrator (the brand) is given greater weight and precedence in the algorithm over and above the fact that the story was about his assault of a 17-year-old girl. The Facebook emoji suggestion algorithm learned that “Drake+Concert=Extreme Joy And Adoration Emojis,” even when placed in the context of a “Story About Sexual Assault.” And if you think about Facebook’s reinforcement techniques for your timeline, wherein you are increasingly likely to see the kind of content you’ve engaged with before, then this makes perfect sense.

Facebook wants you to click and like and fire emoji and heart-eyes emoji or whatever it is that makes you more likely to engage with their advertising partners and generate revenue for Facebook. I’ve got a book chapter coming out soon where I put it like this:

> And how was it trained? By exposure to Facebook’s network patterns and the behavior of people in them—networks which Facebook has been notoriously reticent to moderate for racist, disableist, sexist, xenophobic, transphobic, homophobic, or otherwise bigoted content. When humans train a system via uncritical pattern-recognition protocols applied to that system’s continual exposure to bigoted datasets, humans will get a bigoted system. When humans then teach that bigoted system to weight its outputs for use in a zero-sum system like capitalist marketing and advertising, where every engagement is a “good” engagement, the system will then exacerbate that bigotry in an attempt to generate the most engagement. Once that’s done, the capitalist advertising-driven system made of the biases and bigotries it learned from humans will sell the amplified iterations of those biases and bigotries back to those humans. Then, the assessment of how those humans engage with those iterations (remembering, of course, that any click is a good click) will, again, inform the system’s next modulations. And then the cycle begins again.

That is what I mean, and that is why all of this is important.