Hello from the Southern Blue Mountains of These Soon To Be Re-United States.

I realised that, for someone whose work and life and public face is as much about magic as mine is, I haven’t done a lot of intention- and will-setting, here. That is, I haven’t stated and formulated with a clear mind and intention what I want and will work to bring into existence.

Now, there are a lot of magical schools of thought that go in for the power of setting your intention, abstracting it out from yourself, and putting it into the universe as a kind of unconscious signal. Sigilizing, “let go and let god,” that whole kind of thing.

But there’s also something to be said for just straight-up clearly formulating the concepts and words to state what you want, and fixing your mind on what it will take to achieve. So here’s what I want, in 2019.

I want more people to understand, accept, and actualize the truth of Dr Safiya Noble’s statement that “If you are designing technology for society, and you don’t know anything about society, you are deeply unqualified” and Deb Chachra’s corollary that “Whether you realise it or not, the technology you’re designing *is* for society.” So what’s that mean to me? It means that I want technologists, designers, academics, politicians, and public personalities to start taking seriously the notion that we need truly interdisciplinary social-science- and humanities-focused programs at every level of education, community organization, and governance if we want the tools and systems which increasingly influence and shape our lives built, sustained, and maintained in beneficial ways.

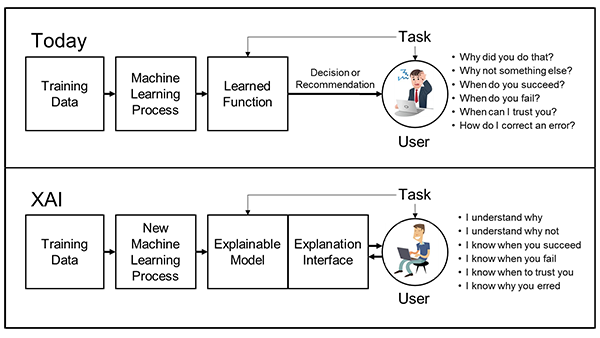

And what do I mean by “beneficial,” here? I mean I want these systems to not replicate and iterate on prejudicial, bigoted human biases, and I want them to actively reduce the harm done by those things. I mean I want tools and systems crafted and laws drafted not just by some engineer who took an ethics class once or some politician who reads WIRED, every so often, but by collaborative teams of people with interoperable kinds of knowledge and lived experience. I mean I want politicians recognizing that the vast majority of people do not in fact understand google’s or facebook’s or amazon’s algorithms or intentions, and that that is, in large part, because the people in charge of those entities do not want us to understand them.

I want people to heed those who try to trace a line from our history of using new technologies and new scientific models and stances to marginalize wide swathes of people, and I want those people who understand and research that to come together and work on something different, to build systems and carve paths that allow us to push back against the entrenched, deep-cut, least-resisting, lowest-common-denominator shit which constitutes the way that power and prejudice and assumptions thereof (racism, [dis-]ableism, sexism, homophobia, misogyny, fatphobia, xenophobia, transphobia, colourism, etc.) act on and shape and are the world in which we live.

I want us all to deconstruct and resist and dismantle the oppressive bullshit in our lives and the lives of those we love, and I want to build techno-socio-ethico-political systems grounded in compassion and care and justice and an engaged, intentional way of living. I want to be able to clearly communicate the good of that. I want people to easily understand the good in that, and understand the ease of that good, if we take the strengths of our alterities, our differing phenomenologies, our experiential othernesses, and respect them and weave them together into a mutually supportive interoperable whole.

I want to publish papers about these things, and I want people to read and appreciate them. I want to clearly demonstrate the linkages I see and make them into a compelling lens through which to act in the world.

I want to create beauty and joy and refuge and shelter for those who need it and I want to create a deep, rending, claws-and-teeth-filled defense against those who would threaten it, and I want those billion-toothed maws and gullets and the pressure of those thousand-thousand-thousand eyes to act as a catalyst for those who would be willing to transform themselves. I want to build a community of people who are safe and cared-for and who understand the value of compassion, and understand that sometimes compassion means you burn a motherfucker to the ground.

I want to strengthen the bonds that need strengthening, and I want the strength to sever any that need severing. I want, as much as possible, for those to be the same, for me, as they are for the other people involved.

I want to push back as meaningfully as we still can against the worst of what’s coming from what humans have done to this planet’s climate, and I want to do that from the position of safeguarding the most vulnerable among us, and I want to do so with an understanding that whatever we do, Right Now, is just a small interim step to buy us enough time to do the really hard systemic shit, as we move forward.

I want people to realize their stock options won’t stop them from suffocating to death in the 140°F/60°C heat and I want people to realize that there’s no money in Heaven and that even if there were, from all I read, that Joshua guy and his Dad don’t take too kindly to people who hurt the poor and marginalized or who wreck the place they were told to steward.

I want people to realize that the people who need to realize those things are the same sets of people.

I want a clear mind and a full heart and the will to take care of myself enough to keep trying to help make these things happen.

I want you happy and healthy and whole, however you define that for you.

I want Alexandria Ocasio-Cortez to begin what will be a five-year process of digging deep into both the communities she represents and the DC machinery (it has to be both). (And then I want her to get a place in the cabinet of whatever left-leaning progressive wins the White House in 2020, and help them win again in 2024. And then I want her to win in 2028.)

I want every person in a position of power to realize that they need to consult and heed the people and systems over whom they have power, to truly understand their needs.

I want everyone to have their individual and collective basic survival needs met so they can experience what that does for the scope of their desires and what they believe is possible.

I want the criminal indictment of the (at the time of this writing) Current Resident of the Oval Office and every high-level politician who enabled his position. I want the people least likely to understand and accept why this is necessary to quickly and fully understand and accept that this is necessary.

I want a just and kind and compassionate world and I want to be active within it.

I want to know what you want.

So what do you want?