-Human Dignity-

The other day I got a CFP for “the future of human dignity,” and it set me down a path thinking.

We’re worried about shit like mythical robots that can somehow simultaneously enslave us and steal the shitty low-paying jobs that none of us want but all of us have to have so we can pay off the debt we accrued to get the education we were told would be necessary to get those jobs, while other folks starve and die of exposure in a world that is just chock full of food and houses…

About shit like how we can better regulate the conflated monster of human trafficking and every kind of sex work, when human beings are doing the best they can to direct their own lives—to live and feed themselves and their kids on their own terms—without being enslaved and exploited…

About, fundamentally, how to make reactionary laws to “protect” the dignity of those of us whose situations the vast majority of us have not worked to fully appreciate or understand, while we all just struggle to not get: shot by those who claim to protect us, willfully misdiagnosed by those who claim to heal us, or generally oppressed by the system that’s supposed to enrich and uplift us…

…but no, we want to talk about the future of human dignity?

Louisiana’s drowning, Missouri’s on literal fire, Baltimore is almost certainly under some ancient mummy-based curse placed upon it by the angry ghost of Edgar Allan Poe, and that’s just in the One Country.

Motherfucker, human dignity ain’t got a Past or a Present, so how about let’s reckon with that before we wax poetically philosophical about its Future.

I mean, it’s great that folks at Google are finally starting to realise that making sure the composition of their teams represents a variety of lived experiences is a good thing. But now the questions are, 1) do they understand that it’s not about tokenism, but about being sure that we are truly incorporating those who were previously least likely to be incorporated, and 2) what are we going to do to not only specifically and actively work to change that, but also PUBLICIZE THAT WE NEED TO?

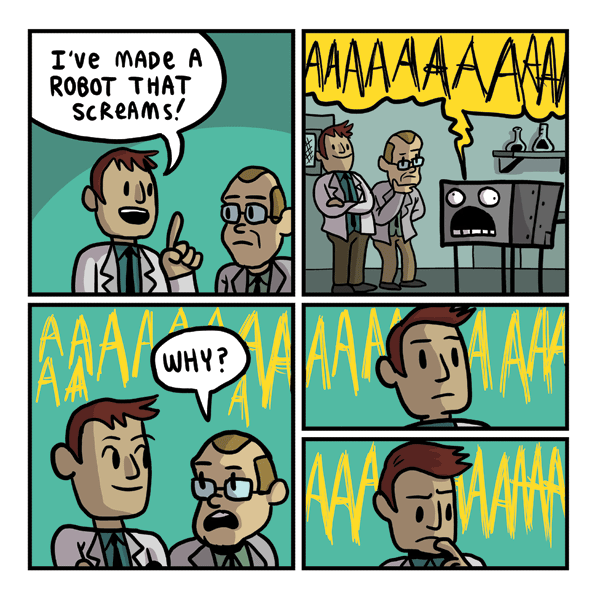

These are the kinds of things I mean when I say, “I’m not so much scared of/worried about AI as I am about the humans who create and teach them.”

There’s a recent opinion piece at the Washington Post, titled “Why perceived inequality leads people to resist innovation.” I read something like that and I think… Right, but… that perception is a shared one based on real impacts of tech in the lives of many people; impacts which are (get this) drastically unequal. We’re talking about implications across communities, nations, and the world, at an intersection with a tech industry that has a really quite disgusting history of “disruptively innovating” people right out of their homes and lives without having ever asked the affected parties about what they, y’know, NEED.

So yeah. There’s a fear of inequality in the application of technological innovation… Because there’s a history of inequality in the application of technological innovation!

This isn’t some “well aren’t all the disciplines equally at fault here,” pseudo-Kumbaya false equivalence bullshit. There are neoliberal underpinnings in the tech industry that are basically there to fuck people over. “What the market will bear” is code for, “How much can we screw people before there’s backlash? Okay so screw them exactly that much.” This model has no regard for the preexisting systemic inequalities between our communities, and even less for the idea that it (the model) will both replicate and iterate upon those inequalities. That’s what needs to be addressed, here.

Check out this piece over at Killscreen. We’ve talked about this before—about how we’re constantly being sold that we’re aiming for a post-work economy, where the internet of things and self-driving cars and the sharing economy will free us all from the mundaneness of “jobs,” all while we’re simultaneously being asked to ignore that our trajectory is gonna take us straight through and possibly land us square in a post-Worker economy, first.

Never mind that we’re still gonna expect those ex-workers to (somehow) continue to pay into capitalism, all the while.

If, for instance, either Uber’s plan for a driverless fleet or the subsequent backlash from their stable (I mean “drivers”) is shocking to you, then you have managed to successfully ignore this trajectory.

Completely.

Disciplines like psychology and sociology and history and philosophy? They’re already grappling with the fears of the ones most likely to suffer said inequality, and they’re quite clear on the fact that, the ones who have so often been fucked over?

Yeah, their fears are valid.

You want to use technology to disrupt the status quo in a way that actually helps people? Here’s one example of how you do it: “Creator of chatbot that beat 160,000 parking fines now tackling homelessness.”

Until then, let’s talk about constructing a world in which we address the needs of those marginalised. Let’s talk about magick and safe spaces.

-Squaring the Circle-

Speaking of CFPs, several weeks back, I got one for a special issue of Philosophy and Technology on “Logic As Technology,” and it made me realise that Analytic Philosophy somehow hasn’t yet understood and internalised that its wholly invented language is a technology…

…and then that realisation made me realise that Analytic Philosophy hasn’t understood that language as a whole is a Technology.

And this is something we’ve talked about before, right? Language as a technology, but not just any technology. It’s the foundational technology. It’s the technology on which all others are based. It’s the most efficient way we have to cram thoughts into the minds of others, share concept structures, and make the world appear and behave the way we want it to. The more languages we know, right?

We can string two or more knowns together in just the right way, and create a third, fourth, fifth known. We can create new things in the world, wholecloth, as a result of new words we make up or old words we deploy in new ways. We can make each other think and feel and believe and do things, with words, tone, stance, knowing looks. And this is because Language is, at a fundamental level, the oldest magic we have.

Lewis Carroll tells us that whatever we tell each other three times is true, and many have noted that lies travel far faster than the truth, and at the crux of these truisms—the pivot point, where the power and leverage are—is Politics.

This week, much hay is being made about the University of Chicago’s letter decrying Safe Spaces and Trigger Warnings. Ignoring for the moment that every definition of “safe space” and “trigger warning” put forward by their opponents tends to be a straw man of those terms, let’s just make an attempt to understand where they come from, and how we can situate them.

Trauma counseling and trauma studies are the epitome of where safe space and trigger warnings come from, and for the latter, that definition is damn near axiomatic. Triggers are about trauma. But safe space language has far more granularity than that. Microaggressions are certainly damaging, but they aren’t on the same level as acute traumas. Where acute traumas are like gun shots or bomb blasts (and may indeed be those actual things), societal microaggressions are more like a slow constant siege. But we still need the language of safe spaces to discuss them: said space is something like a bunker in which to regroup, reassess, and plan for what comes next.

Now it is important to remember that there is a very big difference between “safe” and “comfortable,” and when laying out the idea of safe spaces, every social scientist I know takes great care to outline that difference.

Education is about stretching ourselves, growing and changing, and that is discomfort almost by definition. I let my students know that they will be uncomfortable in my class, because I will be challenging every assumption they have. But discomfort does not mean I’m going to countenance racism or transphobia or any other kind of bigotry.

Because the world is not a safe space, but WE CAN MAKE IT SAFER for people who are microaggressed against, marginalised, assaulted, and killed for their lived identities, by letting them know not only how to work to change it, but SHOWING them through our example.

Like we’ve said, before: No, the world’s not safe, kind, or fair, and with that attitude it never will be.

So here’s the thing, and we’ll lay it out point-by-point:

A Safe Space is any realm that is marked out for the nonjudgmental expression of thoughts and feelings, in the interest of honestly assessing and working through them.

“Safe Space” can mean many things, from “Safe FROM Racist/Sexist/Homophobic/Transphobic/Fatphobic/Ableist Microaggressions” to “safe FOR the thorough exploration of our biases and preconceptions.” The terms of the safe space are negotiated at the marking out of them.

The terms are mutually agreed-upon by all parties. The only imposition would be, to be open to the process of expressing and thinking through oppressive conceptual structures.

Everything else—such as whether to address those structures as they exist in ourselves (internalised oppressions), in others (aggressions, micro- or regular sized), or both and their intersection—is negotiable.

The marking out of a Safe Space performs the necessary function, at the necessary time, defined via the particular arrangement of stakeholders, mindset, and need.

And, as researcher John Flowers notes, anyone who’s ever been in a Dojo has been in a Safe Space.

From a Religious Studies perspective, defining a safe space is essentially the same process as that of marking out a RITUAL space. For students or practitioners of any form of Magic[k], think Drawing a Circle, or Calling the Corners.

Some may balk at the analogy to the occult, thinking that it cheapens something important about our discourse, but look: Here’s another way we know that magick is alive and well in our everyday lives:

If they could, a not-insignificant number of US Republicans would overturn the Affordable Care Act and rally behind a Republican-crafted replacement (RCR). However, because the ACA has done so very much good for so many, it’s likely that the only RCR that would have enough support to pass would be one that looked almost identical to the ACA. The only material difference would be that it didn’t have President Obama’s name on it—which is to say, it wouldn’t be associated with him, anymore, since his name isn’t actually on the ACA.

The only reason people think of the ACA as “Obamacare” is because US Republicans worked so hard to make that name stick, and now that it has been widely considered a triumph, they’ve been working just as hard to get his name away from it. And if they did manage to achieve that, it would only be true due to some arcane ritual bullshit. And yet…

If they managed it, it would be touted as a “Crushing defeat for President Obama’s signature legislation.” It would have lasting impacts on the world. People would be emboldened, others defeated, and new laws, social rules, and behaviours would be undertaken, all because someone’s name got removed from a thing in just the right way.

And that’s Magick.

The work we do in thinking about the future sometimes requires us to think about things from what stuffy assholes in the 19th century liked to call a “primitive” perspective. They believed in a kind of evolutionary anthropological categorization of human belief, one in which all societies move from “primitive” beliefs like magic through moderate belief in religion, all the way to sainted perfect rational science. In contemporary Religious Studies, this evolutionary model is widely understood to be bullshit.

We still believe in magic, we just call it different things. The concept structures of sympathy and contagion are still at play, here; the ritual formulae of word and tone and emotion and gesture all still work when you call them political strategy and marketing and branding. They’re all still ritual constructions designed to make you think and behave differently. They’re all still causing spooky action at a distance. They’re still magic.

The world still moves on communicated concept structure. It still turns on the dissemination of the will. If I can make you perceive what I want you to perceive, believe what I want you to believe, move how I want you to move, then you’ll remake the world, for me, if I get it right. And I know that you want to get it right. So you have to be willing to understand that this is magic.

It’s not rationalism.

It’s not scientism.

It’s not as simple as psychology or poll numbers or fear or hatred or aspirational belief causing people to vote against their interests. It’s not that simple at all. It’s as complicated as all of them, together, each part resonating with the others to create a vastly complex whole. It’s a living, breathing thing that makes us think not just “this is a thing we think” but “this is what we are.” And if you can do that—if you can accept the tools and the principles of magic, deploy the symbolic resonance of dreamlogic and ritual—then you might be able to pull this off.

But, in the West, part of us will always balk at the idea that the Rational won’t win out. That the clearer, more logical thought doesn’t always save us. But you have to remember: Logic is a technology. Logic is a tool. Logic is the application of one specific kind of thinking, over and over again, showing a kind of result that we convinced one another we preferred to other processes. It’s not inscribed on the atoms of the universe. It is one kind of language. And it may not be the one most appropriate for the task at hand.

Put it this way: When you’re in Zimbabwe, will you default to speaking Chinese? Of course not. So why would we default to mere Rationalism, when we’re clearly in a land that speaks a different dialect?

We need spells and amulets, charms and warded spaces; we need sorcerers of the people to heal and undo the hexes being woven around us all.

-Curious Alchemy-

Ultimately, the rigidity of our thinking and our inability to adapt have led us to be surprised by too much that we wanted to believe could never have come to pass. We want to call all of this “unprecedented,” when the truth of the matter is, we carved this precedent out every day for hundreds of years, and the ability to think in weird paths is what will define those who thrive.

If we are going to do the work of creating a world in which we understand what’s going on, and can do the work to attend to it, then we need to think about magic.